Introduction

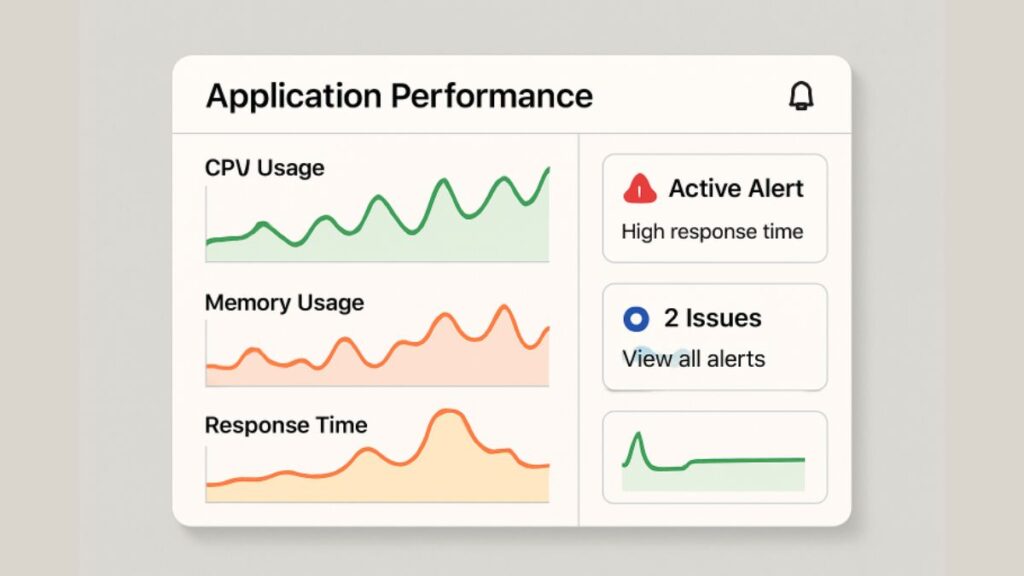

Maintaining reliable application performance is vital in today’s digitally driven environment, where even brief disruptions can result in user frustration and financial loss. Traditional monitoring tools often detect issues only after they arise, so organizations increasingly need adaptive strategies that provide real-time insights and faster resolutions. Modern approaches to application performance monitoring allow teams to anticipate challenges, improve responsiveness, and ensure smooth user experiences. To learn more about advanced tools that support this goal, visit https://www.eginnovations.com/product/application-performance-monitoring for details on tracking and optimizing essential applications.

Modern monitoring practices focus on proactively identifying and mitigating performance bottlenecks, leveraging automation and analytics. This transition from reactive to intelligent monitoring enables organizations to resolve issues swiftly before users notice them. As architectures grow more complex, so does the need for advanced techniques, ranging from dynamic sampling to synthetic user testing. By integrating these techniques into daily workflows, organizations set the foundation for consistent reliability and customer satisfaction.

Performance monitoring is also closely tied to overall business outcomes. Unexpected slowdowns can disrupt service delivery and cause customers to abandon sites or apps. That’s why it’s crucial to adopt monitoring solutions that capture meaningful data without overwhelming your infrastructure or teams.

This guide examines smarter ways to monitor application performance, offering best practices and actionable strategies for modern IT and DevOps teams seeking sustainable growth and operational excellence.

Adaptive Sampling for Efficient Monitoring

Comprehensive data collection can itself degrade application performance, especially under heavy traffic. Adaptive sampling is a smarter approach that dynamically adjusts how much data is collected, depending on the system’s state and current workload. This technique lets you gather enough data to detect anomalies without overloading your system with metrics.

For example, an adaptive system might increase the sampling rate during peak hours to catch transient issues. Conversely, during periods of low activity, it reduces data capture to save resources. This balance keeps application monitoring lightweight yet informative, which can mean less noise and more actionable insights. For further reading, see "The New Era Of Compute: How AI Impacts Observability."
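The idea above can be sketched in a few lines of Python. This is a minimal illustration, not a production sampler: the class name `AdaptiveSampler`, the rate limits, and the `peak_rps` threshold are all assumptions made for the example, and a real system would likely react to error rates or latency as well as raw load.

```python
import random
from dataclasses import dataclass


@dataclass
class AdaptiveSampler:
    """Chooses what fraction of requests to record, based on recent load."""

    min_rate: float = 0.01    # floor used when the system is quiet (assumed value)
    max_rate: float = 0.50    # ceiling used at peak load (assumed value)
    peak_rps: float = 1000.0  # requests/second treated as "peak" (assumed value)
    rate: float = 0.01        # current sampling rate

    def update(self, requests_per_second: float) -> float:
        """Scale the rate linearly between min_rate and max_rate with load."""
        load = min(max(requests_per_second / self.peak_rps, 0.0), 1.0)
        self.rate = self.min_rate + load * (self.max_rate - self.min_rate)
        return self.rate

    def should_sample(self, rng=random) -> bool:
        """Return True for roughly `rate` of the calls."""
        return rng.random() < self.rate
```

A request handler would call `update()` periodically with the observed request rate and then call `should_sample()` per request to decide whether to record detailed metrics for it.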

Utilizing Microbenchmark Suites

Microbenchmarking is a focused approach to performance testing, running small, repeatable tests on critical application components before deployment. Unlike full-scale benchmarks, microbenchmark suites target specific functions or modules, revealing subtle regressions that might go unnoticed in larger tests. Integrating microbenchmarks into the quality assurance process offers early detection and resolution of potential performance drifts.

Automated microbenchmarks help QA and development teams spot performance discrepancies as soon as new code is committed. This agility accelerates the feedback loop, shortening response times to performance threats and reducing the likelihood of production-related incidents.
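As a rough sketch of what such a check could look like, the example below times a single function with Python's standard `timeit` module and compares the result against a stored baseline. The function `parse_order`, the helper names, and the 20% tolerance are hypothetical choices made for illustration.

```python
import timeit


def parse_order(line: str) -> dict:
    """Hypothetical hot-path function under test."""
    sku, qty, price = line.split(",")
    return {"sku": sku, "qty": int(qty), "price": float(price)}


def microbenchmark(func, *args, repeat: int = 5, number: int = 10_000) -> float:
    """Return the best observed per-call time in seconds."""
    timer = timeit.Timer(lambda: func(*args))
    runs = timer.repeat(repeat=repeat, number=number)
    # The minimum is the run least disturbed by other activity on the machine.
    return min(runs) / number


def is_regression(current: float, baseline: float, tolerance: float = 0.20) -> bool:
    """True when `current` is slower than `baseline` by more than `tolerance`."""
    return current > baseline * (1 + tolerance)
```

In practice the baseline would come from a previous run on comparable hardware, for example `is_regression(microbenchmark(parse_order, "A1,2,9.99"), baseline)`, since timings are only comparable within the same environment.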

Advanced Debugging Strategies

Modern applications increasingly rely on microservice architectures, in-memory databases, and distributed systems, which, while enhancing scalability and performance, also add significant complexity to debugging. Traditional methods like step-through debugging or static log analysis often fail to capture the whole picture, as issues may span multiple services, environments, or data layers. Advanced debugging strategies address this challenge by incorporating transparent user-level tracing, which maps execution paths across interconnected services to highlight where failures or delays occur. In addition, multi-level abstraction models combine insights from system resources, application processes, and user-level interactions, enabling a holistic view of performance bottlenecks. By correlating these layers, developers can isolate root causes more quickly and maintain reliability in highly dynamic, distributed environments.
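To make the tracing idea concrete, here is a simplified, in-process sketch: every unit of work is recorded as a span carrying a shared trace ID, so the slowest hop in a request can be picked out afterwards. The `Tracer` and `Span` classes are invented for this example; real deployments typically rely on a dedicated tracing library and propagate the trace ID between services in request headers.

```python
import time
import uuid
from contextlib import contextmanager
from dataclasses import dataclass, field


@dataclass
class Span:
    trace_id: str
    service: str
    operation: str
    duration_ms: float = 0.0


@dataclass
class Tracer:
    # One trace ID is shared by every span belonging to the same request.
    trace_id: str = field(default_factory=lambda: uuid.uuid4().hex)
    spans: list = field(default_factory=list)

    @contextmanager
    def span(self, service: str, operation: str):
        """Time the enclosed block and record it as a span."""
        record = Span(self.trace_id, service, operation)
        start = time.perf_counter()
        try:
            yield record
        finally:
            record.duration_ms = (time.perf_counter() - start) * 1000
            self.spans.append(record)

    def slowest(self) -> Span:
        """Return the recorded span with the longest duration."""
        return max(self.spans, key=lambda s: s.duration_ms)
```

Wrapping each downstream call in `with tracer.span("inventory", "lookup"):` and then calling `tracer.slowest()` points to the hop that contributed most to the delay. Note that a span wrapping other spans includes their time, so this sketch works best with sequential, non-nested spans.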

When and Why to Use Advanced Debugging

Advanced debugging is crucial when diagnosing intermittent slowdowns, elusive memory leaks, or performance degradation in specific user scenarios. Using advanced visualization tools and correlation models, engineers can quickly isolate high-latency paths or misbehaving microservices for targeted optimization. More on debugging approaches can be found in "Effective AI Decision Making Starts With Visibility And Trust."

Proactive Monitoring with Synthetic Monitoring

Synthetic monitoring lets organizations be proactive by simulating typical user interactions with the application using automated scripts. By continuously running these scripts, teams can track response times and availability, quickly identifying performance problems, uptime issues, or broken workflows, even before users encounter them. This method is especially valuable during off-peak hours or right after new deployments, when real traffic may be too sparse to surface problems.

Unlike real-user monitoring, which only records a problem once real users run into it, synthetic monitoring acts as an always-on test, providing round-the-clock confidence in application reliability. This helps minimize downtime and meet stringent service-level agreements (SLAs).
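A scripted check of this kind can be quite small. The sketch below, which uses only Python's standard library, requests a URL, measures the response time, and flags the check as failed if the status is not 200 or the response is too slow. The names `run_check` and `CheckResult` and the one-second threshold are assumptions for illustration; a scheduler such as cron would be responsible for running it continuously.

```python
import time
import urllib.request
from dataclasses import dataclass


@dataclass
class CheckResult:
    name: str
    ok: bool
    status: int
    elapsed_ms: float


def http_get(url: str, timeout: float = 5.0) -> int:
    """Fetch `url` and return the HTTP status code."""
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return response.status


def run_check(name: str, url: str, fetch=http_get, max_ms: float = 1000.0) -> CheckResult:
    """Run one synthetic check and report availability and latency."""
    start = time.perf_counter()
    try:
        status = fetch(url)
    except OSError:
        # Covers connection failures, timeouts, and HTTP error responses.
        status = 0
    elapsed_ms = (time.perf_counter() - start) * 1000
    ok = status == 200 and elapsed_ms <= max_ms
    return CheckResult(name, ok, status, elapsed_ms)
```

The `fetch` parameter is injectable so the check can be exercised without network access; a fuller script would chain several such steps to walk through a login or checkout flow.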

Integrating Real-Time Monitoring in DevOps

DevOps practitioners require real-time, actionable data to resolve issues rapidly and ensure service continuity. Integrating real-time monitoring tools such as centralized logging solutions and automated data pipelines facilitates quick root-cause analysis and informed decision-making. Solutions like the ELK Stack (Elasticsearch, Logstash, Kibana) or Splunk aggregate and visualize logs, metrics, and traces across your ecosystem, making system-wide insights easily accessible.
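Centralized logging tools are generally easier to work with when applications emit structured log lines. As one possible approach, the sketch below configures Python's standard `logging` module to write one JSON object per line, which a log shipper can then forward for indexing. The field names (`service`, `duration_ms`) and the `JsonFormatter` class are illustrative assumptions rather than a schema any particular tool requires.

```python
import json
import logging


class JsonFormatter(logging.Formatter):
    """Render each log record as a single JSON object."""

    def format(self, record: logging.LogRecord) -> str:
        payload = {
            "timestamp": self.formatTime(record),
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
        }
        # Optional fields attached through the `extra=` argument.
        for key in ("service", "duration_ms"):
            if hasattr(record, key):
                payload[key] = getattr(record, key)
        return json.dumps(payload)


def build_logger(name: str, stream=None) -> logging.Logger:
    """Create a logger that writes JSON lines to `stream` (stderr by default)."""
    logger = logging.getLogger(name)
    handler = logging.StreamHandler(stream)
    handler.setFormatter(JsonFormatter())
    logger.handlers = [handler]
    logger.setLevel(logging.INFO)
    logger.propagate = False
    return logger
```

A call such as `build_logger("checkout").info("order placed", extra={"service": "checkout", "duration_ms": 42})` then produces a line that can be filtered and charted by field rather than parsed with regular expressions.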

Embedding monitoring into continuous integration and continuous delivery (CI/CD) pipelines creates a culture of accountability, drives faster remediation, and makes performance a core tenet of your software lifecycle.
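One simple way to embed a performance check in a pipeline is a gate that fails the build when a latency budget is exceeded. The following sketch assumes latency samples have already been collected by an earlier pipeline step; the function names and the choice of the 95th percentile as the gated metric are assumptions for illustration.

```python
import statistics


def p95(samples_ms: list) -> float:
    """95th percentile of the samples (inclusive method)."""
    # quantiles(n=100) returns 99 cut points; index 94 is the 95th percentile.
    return statistics.quantiles(samples_ms, n=100, method="inclusive")[94]


def gate(samples_ms: list, budget_ms: float) -> int:
    """Return 0 when p95 latency is within budget, 1 otherwise."""
    observed = p95(samples_ms)
    if observed > budget_ms:
        print(f"FAIL: p95 {observed:.1f} ms exceeds budget {budget_ms:.1f} ms")
        return 1
    print(f"PASS: p95 {observed:.1f} ms within budget {budget_ms:.1f} ms")
    return 0
```

A pipeline step could pass the return value to `sys.exit()`, so that a non-zero result stops the deployment in the same way a failing unit test would.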

Conclusion

Embracing more intelligent application performance monitoring allows organizations to stay ahead in an increasingly digital marketplace. Instead of waiting for disruptions to occur, businesses can proactively identify and address potential bottlenecks before they impact users. The techniques covered here give broader visibility into complex, interconnected systems, reducing the blind spots that traditional monitoring often leaves. By leveraging predictive analytics, machine learning, and real-time data, IT teams can maintain consistent application performance and respond quickly to anomalies. This not only enhances the user experience but also helps build trust and loyalty among customers. Moreover, aligning IT performance with broader business goals helps applications remain agile, efficient, and capable of supporting growth. Intelligent monitoring turns application management into a strategic advantage, driving resilience, innovation, and measurable business outcomes.